Artificial Intelligence (AI) is one of the most powerful technological forces in this era and, while it began in the data centre, it’s moving quickly to the edge.

NVIDIA’s Charlie Boyle says that one of the biggest things the sector is seeing – which started at the end of 2020 but accelerated into 2021 – is the idea of an AI centre of excellence for companies and institutions.

“There’s a big change from what we were seeing a few years ago. Previously, when people worked on AI, it would tend to start small, getting some results and would grow over time,” he explains.

“We are engaging with a lot of customers today, who have realised that starting very small and growing organically may not get them the results they need in the next couple of years. Before, an individual researcher or a small team may procure one or two systems, a little bit of infrastructure, some networking and storage.

“Now, we are seeing that more at a strategic level inside of the company where, in order to achieve even basic results, management is realising it needs a critical mass of infrastructure to carry out the experiment to drive the applications that they need. Counting on starting small and eventually growing wasn’t really cutting it.”

NVIDIA started its own AI journey many years ago and saw the same pattern – individual researchers in the company getting their own infrastructure and having it installed under their desks.

“We made a strategic decision around five years ago that we were going to centralise all of that and just make it as a resource to our entire company, in terms of our own data centres. A year later, we started with our SATURNV Infrastructure. That was originally 125 DGX systems dedicated for our internal use, researcher software and development,” Boyle reveals.

“We quickly found that as soon as we turned that on, it was almost 100% utilised for the first couple of weeks. Today, we have well over multiple thousands of systems that are running just for our own internal use. We are seeing our customers go the same way now, putting down significant infrastructure in the millions of dollars bracket to start with so that they build that centre of gravity for their company.”

AI centres of excellence have grown across the world and the trend is not geographically limited – it is in all nations and sectors right now. Whether on premises or in a colocation facility, it is a big data centre trend coming at people this year, says Boyle.

Last year, NVIDIA created an AI laboratory in Cambridge, at the Arm headquarters: a Hadron collider or Hubble telescope for Artificial Intelligence.

“For AI, one of the things that we have seen is, unlike other infrastructure trends in the past, whether it was compute or storage or network, to do good work in AI, it’s not just a single piece of infrastructure,” says Boyle.

“You have to start with very high-speed compute, like we have in our DGX and in our OEM systems – but compute isn’t enough. You have to have the high-speed network interconnecting all those systems to get the best use out of them. As important as the compute and the network are, you have got to be able to get access to the data. That is one of the trends we have seen bleed into the hybrid trend. For AI, data is critical to get your work done.

“Many of these companies and institutions are sitting on massive amounts of data that is already either in their enterprise or in their colocation facility. Our advice is to have an AI centre of excellence as close to your data as possible. If your data is already in the cloud, we can help to build a centre of excellence out of AI cloud infrastructure.”

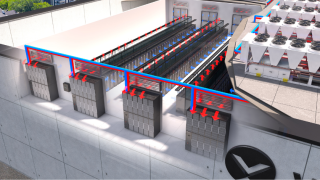

In 2020, NVIDIA pledged an investment of around £40 million in the NVIDIA DGX SuperPOD system, which is capable of delivering more than 400 petaflops of AI performance and eight petaflops of Linpack performance.

“If your data is sitting in a colocation facility or it is on-prem, we can help build that centre of excellence there because getting high-speed access to your data with very little latency really enables your data scientists to quickly iterate their experiments, and that is truly where the innovation comes in,” he says.

“There are many large language models that people are talking about now, such as being able to talk to a system and have it understand not only the words you are saying but the intent and potentially your situation.

“Some of these data science experiments, doing a single run of them could take a week to compute. If you have only got one system and it takes a week, then your cycle time is a week. When you have hundreds of systems, you can have a lot of experiments going on at the same time to get the best results, so that you can provide that better level of customer service.”
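The arithmetic behind that point can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each run occupies one system for a full week, runs are independent of one another, and the function name and the figure of 100 experiments are hypothetical rather than anything NVIDIA has published.

```python
import math


def weeks_to_finish(num_experiments: int, num_systems: int,
                    weeks_per_run: float = 1.0) -> float:
    """Wall-clock weeks needed to complete every run.

    Assumes each run ties up exactly one system for its whole duration
    and that runs are independent, so they can execute side by side.
    """
    if num_experiments < 0 or num_systems < 1:
        raise ValueError("need num_experiments >= 0 and num_systems >= 1")
    # Runs execute in waves of at most `num_systems` at a time.
    waves = math.ceil(num_experiments / num_systems)
    return waves * weeks_per_run


if __name__ == "__main__":
    # Hypothetical workload: 100 week-long experiments.
    for systems in (1, 10, 100):
        print(f"{systems:>3} systems: {weeks_to_finish(100, systems):.0f} weeks")
```

With one system the hypothetical batch takes 100 weeks; with 100 systems every run finishes in the same week, which is the shorter cycle time Boyle is describing.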

Demand

NVIDIA has a programme where the company works with colocation and data centre providers around the world to make sure that they are ready to run high-power systems. Boyle says that every colocation provider that he is talking to has capacity right now, and they are planning for more capacity this year and beyond.

“That build out of infrastructure and space is happening. There is this great investment happening around the world. The good news for a lot of the customers in this space is there is such a wide variety of very capable colocation providers that are now more accessible to them,” he adds.

Boyle ran large-scale data centres for telecommunication companies a decade or more ago and he says it used to be more difficult for the average enterprise customer or educational institution to actually approach a colocation provider – the language people used was very different.

“What I have seen in the past few years is the colocation environment is not only getting more powerful, but getting more user-friendly, so that it is not a huge hurdle to go from your own internal IT to a very high-end colocation environment,” he explains.

“Everyone is speaking the same language, the standards are widely the same, and it is very easy to set up an environment, even if it is your first time ever approaching a colocation provider – it is no different than installing a rack in your own server closet or data centre.”

Innovation prediction

The common theme over the last decade was to save every bit of data you possibly could because it might be valuable. That resulted in massive data lakes and data swamps, where the data just sits there and does nothing.

“The big trend right now is, instead of just sitting on all of that data, analysing the data in as close to real time as possible to understand what is valuable to you and what you should keep long term,” Boyle says.

“One of the trends we are going to witness with big data is all of that data coming from the edge. How quickly you can do analysis on the edge and separate the useful data from the useless will be critical going forward.

“No matter how fast the data pipes are going to get, they are always going to get filled and you want to fill the pipe with high-value data – things that are making your company either more profitable or have a [better] customer experience.”

He adds that processing and analysing data at the edge, and sending back only the critical data, is going to be very timely over the coming years and into the next decade. This ties into the hybrid infrastructure that will enable workloads in the cloud, on the edge and in a data centre – all of those elements need to work together.
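To make the edge-filtering idea concrete, here is a minimal Python sketch. What counts as high-value data depends entirely on the application, so the rule used here (forward only readings outside an expected band) is a hypothetical stand-in, as are the `Reading` type, the `select_for_upload` function and the sample values.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Reading:
    sensor_id: str
    value: float


def select_for_upload(readings: Iterable[Reading],
                      low: float, high: float) -> List[Reading]:
    """Return only the readings that fall outside the band [low, high].

    Readings inside the band are treated as routine and stay at the edge;
    this rule is an assumption made purely for illustration.
    """
    return [r for r in readings if r.value < low or r.value > high]


if __name__ == "__main__":
    # Hypothetical temperature samples collected on an edge device.
    samples = [
        Reading("t1", 20.1),
        Reading("t1", 20.3),
        Reading("t1", 35.7),
        Reading("t1", 19.9),
        Reading("t1", 4.2),
    ]
    to_send = select_for_upload(samples, low=15.0, high=25.0)
    print(f"sending {len(to_send)} of {len(samples)} readings upstream")
```

In this made-up sample only two of the five readings would travel back to the data centre, leaving the pipe free for data that matters.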