If there’s one thing you can say confidently about this industry, it is that it continuously exceeds expectations. Unless, of course, you’re Gordon Moore, the conceiver of what everyone – though not Moore himself – calls Moore’s law.

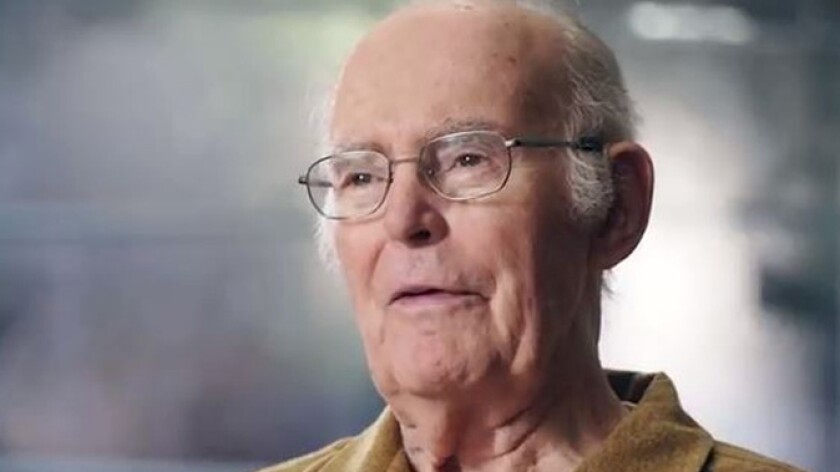

It’s an extraordinary 57 years since Moore (pictured), later one of the founders of Intel, observed that the number of transistors in an integrated circuit was doubling every year – though a decade later, in 1975, he revised his observation to say the number doubles every two years. And, remarkably, it’s held since then.
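To get a feel for how quickly that compounds, here is a rough Python sketch. It assumes a perfectly steady doubling period, which real chips have only roughly followed, and the function name is illustrative rather than taken from anywhere.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` if the count doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)

# Moore's original 1965 pace: doubling every year, for a decade.
print(growth_factor(10, 1))  # 1024.0

# His 1975 revision, doubling every two years, carried through to 2022.
print(f"{growth_factor(2022 - 1975, 2):.3g}")  # 1.19e+07
```

On that idealised model, a steady two-year doubling from 1975 to 2022 would imply about 12 million times as many transistors per chip.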

Cramming in the chips

Moore’s law is an observation, but then Isaac Newton’s law of gravity, published in 1687, is also an observation. Newton backed his up in his book, Principia, with some profound equations. Moore, on the other hand, published his observation in Electronics magazine, in an article titled “Cramming more components onto integrated circuits”.

He wrote in that 1965 article: “Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years. That means by 1975, the number of components per integrated circuit for minimum cost will be 65,000.”
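Moore’s 65,000 figure is simply yearly doubling carried forward ten years. The sketch below checks that arithmetic; the starting point of 64 components per chip in 1965 is an assumption chosen for illustration, not a figure from the article above.

```python
def projected_components(start_count: int, start_year: int, end_year: int) -> int:
    """Components per chip if the count doubles once a year (Moore's 1965 pace)."""
    return start_count * 2 ** (end_year - start_year)

# Assumed 1965 starting point of 64 components, for illustration only.
print(projected_components(64, 1965, 1975))  # 65536, i.e. roughly 65,000
```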

Of course, that’s what has driven the industry, and Moore’s law shows little sign of slowing down. Telecoms, data centres and the rest have thrived on this bigger, denser, faster, cheaper theme for decades. That’s why your phone has more processing power and storage capacity than was on board Apollo 11 in July 1969. That’s why in a few seconds you can search for and read Newton’s Principia as well as Moore’s original 1965 article on your phone or laptop.

Storage has always been one of the key beneficiaries of the advances in technology that are described by Moore’s law. I still, somewhere, have a drive that could take a 3½ inch disc with a storage capacity of 720 kilobytes or, in the super-advanced version, 1.44 megabytes.

Back in the late 1980s a whole word processing program – WordPerfect, for example – would fit on a single disc.

Drawerful of drives

I also have a drawer full of USB flash memory drives, the sort of thing that used to be handed out at conferences before we became so aware of security. The oldest, which I later threw away as being more or less useless for practical purposes, stored something like 32 megabytes. Now, I have one or two that are 4 gigabytes, but I don’t pick them up any more, partly for reasons of security (our IT department has trained us well) and partly because most of my important information is backed up in the cloud. But flash drives are growing up, and are getting bigger and bigger. They’re not just for freebies at conferences any more. They have moved into the professional world of data centres, as solid-state drives (SSDs).

Sadly, Intel – yes, that Intel, co-founded by Gordon Moore in 1968, three years after he wrote that famous article in Electronics magazine – has said it’s stopping making them, but there are others, such as Samsung and Kioxia.

Intel’s announcement of its departure from the industry disconcerted at least one company, Vast Data, which relies on SSDs from Intel and the other makers to build what it calls “universal storage”. (Great name, Vast Data, by the way.)

“You have all your data in one flash storage,” CMO and co-founder Jeff Denworth told me over coffee in a Soho hotel in London. “There are no more tiers.” I nearly spat out my coffee, remembering the “No more tears” slogan on Johnson’s baby shampoo (hint: there were tears, always).

Access is fast, effectively real time, Denworth told me once I’d recovered my composure. “You stop worrying about things people worried about for years,” he added.

He believes universal storage will see its main use in artificial intelligence (AI), where you need applications, back-up and archiving. “The biggest systems are more than 100 petabytes,” said Denworth, “and some customers are talking about exabytes. If someone came to us for one, we could do that.”

All those brains

And just how big is 100 petabytes? In a 2010 article in Scientific American, Paul Reber, professor of psychology at Northwestern University, where he runs his own Reberlab, suggested the human brain can store around 2.5 petabytes. So one of Vast Data’s giants holds as much as 40 human brains.
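The brain comparison is a single division. As a sketch, using Reber’s 2.5 petabyte estimate and the capacities Denworth mentioned, and treating an exabyte as 1,000 petabytes (decimal units):

```python
BRAIN_CAPACITY_PB = 2.5  # Paul Reber's 2010 estimate, in petabytes

def brain_equivalents(capacity_pb: float) -> float:
    """How many human brains' worth of storage a system holds, on Reber's estimate."""
    return capacity_pb / BRAIN_CAPACITY_PB

print(brain_equivalents(100))    # 40.0  (a 100 petabyte system)
print(brain_equivalents(1_000))  # 400.0 (one exabyte, in decimal units)
```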

The industry has been looking for ways to build and run huge file systems for years, and one of the big challenges has been the risk of failure. Buy more nodes to increase the storage, and the risk of failure increases.
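Why does adding nodes raise the risk? If each node fails independently with some small probability p over a given period, the chance that at least one of n nodes fails is 1 - (1 - p)^n, which climbs quickly with n. A minimal Python sketch, in which the 1% per-node figure is purely an assumption:

```python
def p_any_failure(n_nodes: int, p_node: float) -> float:
    """Chance that at least one of n independently failing nodes fails."""
    return 1 - (1 - p_node) ** n_nodes

# Assumed: each node has a 1% chance of failing in the period considered.
for n in (10, 100, 1000):
    print(f"{n:>5} nodes: {p_any_failure(n, 0.01):.1%}")
```

With those assumed numbers, the chance of some failure goes from under 10% for ten nodes to near certainty for a thousand, which is why large systems have to treat failure as routine.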

And more nodes mean more traffic shunting data around the system. Back in the days when networks ran at relatively slow speeds by today’s standards, that was a serious bottleneck, “but now networks are faster by 400 times”, Denworth said. “You can lose 99 out of 100 controllers and still be online.”
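The flip side is redundancy. If the system stays online while even one controller survives, an outage needs every controller down at the same time. The sketch below assumes independent failures, a big simplification since shared power or network faults are correlated in practice, and an illustrative 10% chance of any one controller being down:

```python
def p_online(n_controllers: int, p_down: float) -> float:
    """Chance that at least one controller is up, assuming independent failures."""
    return 1 - p_down ** n_controllers

# Assumed: each controller is down 10% of the time, independently.
for n in (1, 2, 100):
    print(f"{n:>3} controllers: {p_online(n, 0.10):.6f}")
```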

It’s a way, he said, to build increasingly scalable infrastructure, with no degradation in performance. You can have, he added, 10Gbps networks with thousands of end points.

Another advantage, he said, is that flash drives use a tenth of the power of a typical hard drive, “and a lot less space”. You can get 90 terabytes into a single 2½ inch drive slot.
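To put that density in context, here is how many such drives a 100 petabyte system would need. It’s a back-of-the-envelope sketch: it uses decimal units (1 PB = 1,000 TB) and ignores the extra capacity real systems set aside for redundancy and spares.

```python
import math

def drives_needed(capacity_pb: float, drive_tb: float) -> int:
    """Drives required for raw capacity, ignoring redundancy and spares."""
    return math.ceil(capacity_pb * 1_000 / drive_tb)  # decimal: 1 PB = 1,000 TB

print(drives_needed(100, 90))  # 1112
```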

Who’s using them? The largest market sector is finance, “people who think they’re gaming the system”, he smiled in a throwaway remark that might not go down well with some of his customers. “Most of them have in-house data centres.”

But there are other storage-intensive users, in life sciences, in government and elsewhere in large-scale computing. The European Bioinformatics Institute, he said, keeps most of its 200 petabytes of data on tape, but it is “very pleased” with flash memory. “Booking.com is a customer,” he said. “Its initial order was 10 petabytes.”

AI is, as he suggested earlier in our conversation, a big focus for these huge memories. Investment company Ark Capital predicts that the storage opportunity for AI will be worth $400 billion by 2030. That’s a lot of brains.