Nightshade makes changes to an image's pixels that are invisible to the naked eye but that give the AI inaccurate data from which to infer what certain images should look like.

For example, a “poisoned” image of a cat would teach the AI to generate an image of a dog.
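The sketch below is not Nightshade's method, which this article does not describe. It only illustrates the general idea of an edit that is capped per pixel so it stays hard to see. The flat greyscale "images", the `EPSILON` cap and the `perturb` helper are all hypothetical names and values chosen for illustration.

```python
# Toy illustration only: NOT Nightshade's actual algorithm.
# Assumption: an image is a flat list of 8-bit greyscale pixels (0-255).

EPSILON = 4  # hypothetical cap on how far any single pixel may move


def perturb(pixels, target, epsilon=EPSILON):
    """Nudge each pixel toward `target`, by at most `epsilon` levels."""
    poisoned = []
    for p, t in zip(pixels, target):
        step = max(-epsilon, min(epsilon, t - p))    # clamp the change
        poisoned.append(max(0, min(255, p + step)))  # stay in valid range
    return poisoned


def max_change(original, modified):
    """Largest per-pixel difference between two images."""
    return max(abs(a - b) for a, b in zip(original, modified))


cat = [120, 130, 125, 200, 90, 60]  # made-up "cat" pixels
dog = [10, 250, 125, 203, 95, 0]    # made-up "dog" pixels

poisoned_cat = perturb(cat, dog)
print(poisoned_cat)                   # [116, 134, 125, 203, 94, 56]
print(max_change(cat, poisoned_cat))  # 4
```

No pixel moves by more than four levels out of 255, which is the sense in which such a change can be effectively invisible to a person while still altering the data a model reads.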

The researchers, at the University of Chicago, are led by Professor Ben Zhao and also developed Glaze, a tool that allows artists to mask their personal style to prevent it from being used by AI models.

Zhao’s team tested Nightshade on the latest models from Stable Diffusion, an AI platform that claims to be able to generate photo-realistic images from any text input.

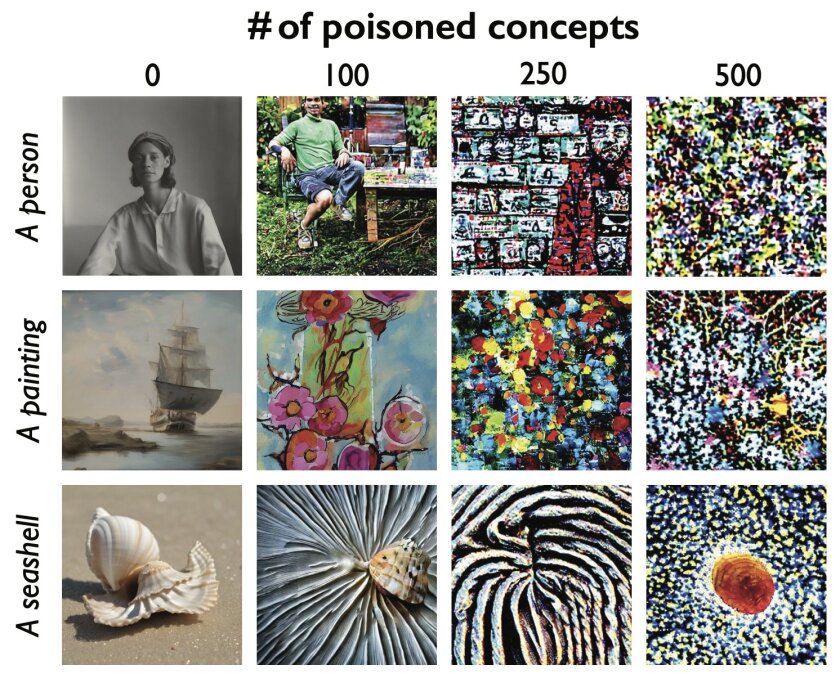

Testing these models alongside an AI model they trained themselves, the team found that after just 50 poisoned images, the models started to produce distorted images. To take the example of a dog, the AI starts to produce dogs with distorted limbs or more cat-like features.

After 300 poisoned samples, Nightshade can trick the AI into generating an image of a cat when the prompt word is dog.

Because of the way AI models create associations between words, the poison spreads, infecting not just the prompt dog but also words the model associates with dog, such as puppy, labrador or fox.
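One way to picture this spread, assuming the model represents each word as a vector and treats words with similar vectors as related, is the toy sketch below. The three-number vectors, the `related_words` helper and the 0.9 threshold are hypothetical and hand-picked for illustration; they are not taken from Stable Diffusion or from the Nightshade paper.

```python
import math

# Hypothetical 3-number "embeddings"; real models learn far larger vectors.
EMBEDDINGS = {
    "dog": [0.9, 0.1, 0.0],
    "puppy": [0.85, 0.2, 0.05],
    "labrador": [0.8, 0.15, 0.1],
    "car": [0.0, 0.1, 0.95],
}


def cosine(a, b):
    """Cosine similarity: close to 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def related_words(word, threshold=0.9):
    """Words whose vectors sit close enough to `word` to share its fate."""
    base = EMBEDDINGS[word]
    return sorted(
        other
        for other, vector in EMBEDDINGS.items()
        if other != word and cosine(base, vector) >= threshold
    )


print(related_words("dog"))  # ['labrador', 'puppy']
```

In this toy setup, corrupting what the model learns for dog would also touch puppy and labrador, because their vectors lie close by, while an unrelated word such as car is left alone.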

“Why Nightshade? Because power asymmetry between AI companies and content owners is ridiculous. If you're a movie studio, gaming company, art gallery, or indep artist, the only thing you can do to avoid being sucked into a model is 1) opt-out lists, and 2) do-not-scrape directives,” Glaze at UChicago said in a post on X (formerly Twitter).

AI companies that have scraped artists' work for their models are facing lawsuits from creators, who were given no prior warning, no chance to consent and no royalties for the use of their work in the models.

Glaze acknowledges that companies like OpenAI have offered artists the opportunity to opt out of having their work submitted to the models, but claims that these mechanisms are not enforceable or verifiable, and place too much power in the hands of tech companies.

Glaze said the Nightshade paper is public and anyone is free to read it, understand it, and do follow-up research.

“It is a technique and can be used for applications in different domains. We hope it will be used ethically to disincentivise unauthorised data scraping, not for malicious attacks,” Glaze said in a follow-up tweet.

“We don’t yet know of robust defences against these attacks. We haven’t yet seen poisoning attacks on modern [machine learning] models in the wild, but it could be just a matter of time. The time to work on defences is now,” Vitaly Shmatikov, a professor at Cornell University who studies AI model security, told MIT Technology Review.