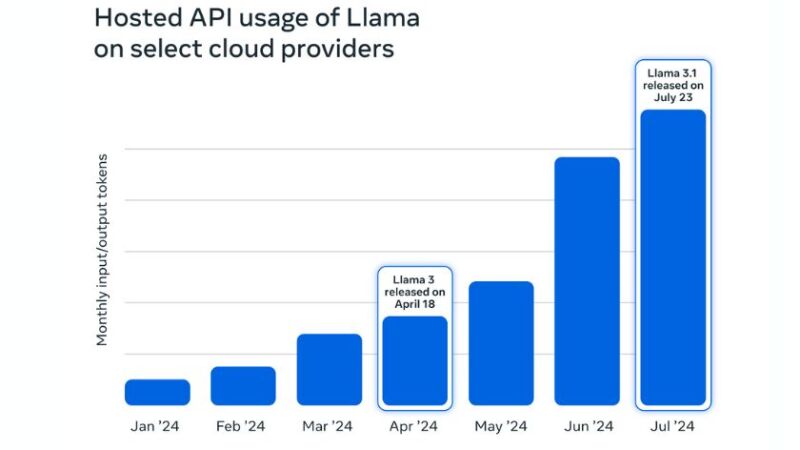

Figures from Meta suggest that demand for its AI models from cloud partners grew 10 times from January to July, with users hungry to access its mammoth Llama 3.1 model.

A company blog post stated that demand for its AI services is so great that the volume of tokens generated by Meta’s service provider partners more than doubled in just three months from May through July.

Meta said that downloads of its Llama models are nearing 350 million, more than 10 times the number of downloads this time last year.


The latest generation of the AI model, Llama 3, dropped in April. Meta followed in late July with Llama 3.1, the largest open source model in history, which is on par in performance with foundation models from OpenAI and Anthropic.

Demand for Meta's Llama models is coming not only from hyperscalers such as AWS, Google Cloud, and Microsoft Azure, but also from vendors including Dell, Scale AI, and Snowflake.

Databricks co-founder and CEO Ali Ghodsi said that in the weeks following the launch of Llama 3.1, thousands of Databricks customers deployed the model, making it the company's fastest-adopted and best-selling open source model ever.

Meanwhile, Groq CEO Jonathan Ross said the startup “can’t add capacity fast enough” to support Meta’s Llama models.

“If we 10x’d the deployed capacity it would be consumed in under 36 hours,” Ross suggested.

Meta said it has grown the number of partner firms with early access to the Llama model by five times and plans to do more to meet “surging demand from partners.”

Ahmad Al-Dahle, VP for generative AI at Meta, said the company has heard from users who want support for Llama to be extended to the likes of Wipro, Lambda, and Cerebras.

“Llama is leading the way on openness, modifiability, and cost efficiency,” wrote Al-Dahle. “We’re committed to building in the open and helping ensure that the benefits of AI extend to everyone.”

Meta launched its Llama series of models some 18 months ago, releasing subsequent upgrades including Llama 2 last July.

As it’s open source, there’s no cost to access the model and a wide array of companies have sought to try the model to power their generative AI applications.

Among Meta’s AI users is AT&T, which is using fine-tuned versions of the Llama models to augment its customer care teams. The models helped deliver a 33% improvement in search-related responses while reducing costs and speeding up response times.

AI is now the top priority for Meta, which has shifted away from its earlier focus on building the metaverse.

Meta CEO Mark Zuckerberg said in an open letter published in July that he wants Llama to become the “industry standard” AI model.

“We’re building teams internally to enable as many developers and partners as possible to use Llama, and we’re actively building partnerships so that more companies in the ecosystem can offer unique functionality to their customers as well,” Zuckerberg wrote.
