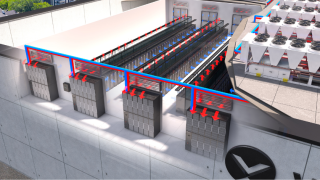

Unveiled at the company’s Advancing AI event in San Francisco, the Pensando Salina 400 and Pensando Pollara 400 are designed to enhance AI workload efficiency by improving network routing to avoid traffic congestion.

The devices are designed to keep AI workloads in data centres powered by vast arrays of GPUs running at peak efficiency. The hardware manages network congestion to avoid performance degradation, re-routes traffic around network failures and recovers quickly from occasional packet loss.

Soni Jiandani, SVP and general manager of the network technology solutions group at AMD, said in a press briefing that the new DPUs will help overcome network challenges brought on by AI workloads.

“Explosive new AI growth is putting new challenges on the network, requiring innovation at a much faster pace and infrastructure that has to be adaptable to meet with the growing needs of the traffic patterns of AI,” Jiandani said.

“It is absolutely critical to have a high-performing network along with a high-performing CPU, DPU, within an AI system. Our focus at AMD is to innovate in every aspect of the AI system and we believe that networking is not only critical, but it's foundational to drive optimal performance.”

AMD’s Salina 400 is designed for front-end networks. Featuring 16 N1 Arm cores, the DPU is aimed at hyperscalers, enabling them to support intelligent load balancing that uses the full available bandwidth while minimising network congestion.

The new Pollara 400 networking adapter, meanwhile, is designed for back-end networks.

Jiandani said companies like Meta have demonstrated that, on average, 30% of the elapsed training cycle time for AI workloads is spent waiting on the back-end network.

The Pollara 400 is designed to optimise back-end networks, keeping performance efficient during intense workloads such as AI training.

The Pollara 400 is the first adapter designed to support the UEC standard for AI and high-performance computing data centre interconnects. Developed by the Ultra Ethernet Consortium, the standard is seen as an alternative to InfiniBand, an interconnect standard largely used by hardware rival Nvidia.

Jiandani told gathered press that the Ethernet-based standard can scale to millions of nodes, whereas the foundational architecture of InfiniBand is not poised to scale beyond 48,000 nodes “without making dramatic and highly complex workarounds.”

The Pollara 400 is also programmable, enabling it to support further UEC-developed standards as they are released.

“Salina 400 and the Pollara 400 are solving the challenges for both front-end and back-end networks, including faster data ingestion, secure access, intelligent load balancing, congestion management and fast failover, and loss recovery,” Jiandani said.

At the heart of both of AMD’s new networking solutions is its P4 engine, a compact, fully programmable unit designed to optimise network workloads.

The P4 engine is capable of supporting 400 gigabits per second (Gb/s) line rate throughput while multiple services run concurrently on the device.
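For a sense of scale, the Gb/s line rate can be expressed in bytes. The snippet below is a minimal sketch, assuming Gb/s means decimal gigabits per second and 8 bits per byte; the function name is illustrative and not taken from any AMD tooling.

```python
def gbps_to_gigabytes_per_second(gigabits_per_second: float) -> float:
    """Convert a line rate in gigabits per second to gigabytes per second."""
    bits_per_byte = 8  # assumption: standard 8-bit bytes, decimal (SI) prefixes
    return gigabits_per_second / bits_per_byte


line_rate_gbps = 400  # line rate quoted for the P4 engine
print(gbps_to_gigabytes_per_second(line_rate_gbps))  # 50.0
```

On those assumptions, a 400 Gb/s link moves roughly 50 gigabytes of data per second at line rate, before any protocol overhead.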

“[The P4] architecture puts AMD in a very unique position because we now have the ability to deliver a holistic portfolio that is future-proof and an end-to-end network solution through full programmability as the system will scale,” Jiandani said.
