Cerebras plans to float its Class A common stock on the Nasdaq under the ticker symbol “CBRS.”

The company did not disclose the number of shares or the proposed price range, saying they have “not yet been determined.”

Citigroup and Barclays are acting as lead book-running managers for the proposed offering, with UBS Investment Bank, Wells Fargo Securities, Mizuho, and TD Cowen also acting as book-running managers.

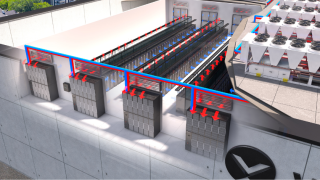

Based in Sunnyvale, California, Cerebras designed the Wafer Scale Engine (WSE), a line of mammoth chips the size of dinner plates that are capable of running AI models at super-fast speeds.

While traditional GPUs contain around 200MB of on-chip memory, Cerebras’ WSE hardware contains 44GB of on-chip static random-access memory (SRAM). The chips themselves are 57 times larger than Nvidia’s H100s.

The latest iteration, the WSE-3, is used to process AI workloads on Cerebras’ new inference solution. Launched in late August, the service lets enterprise users run their AI models at speeds that surpass what hyperscalers offer, with the company claiming it can run Meta’s Llama 3.1 some 20 times faster than traditional GPU-based hyperscale cloud solutions for one-fifth the price.
