Jensen Huang, founder and CEO of Nvidia, kick-started the Consumer Electronics Show (CES) 2025 with a keynote filled with product showcases as the company looks to continue its impressive momentum from 2024.

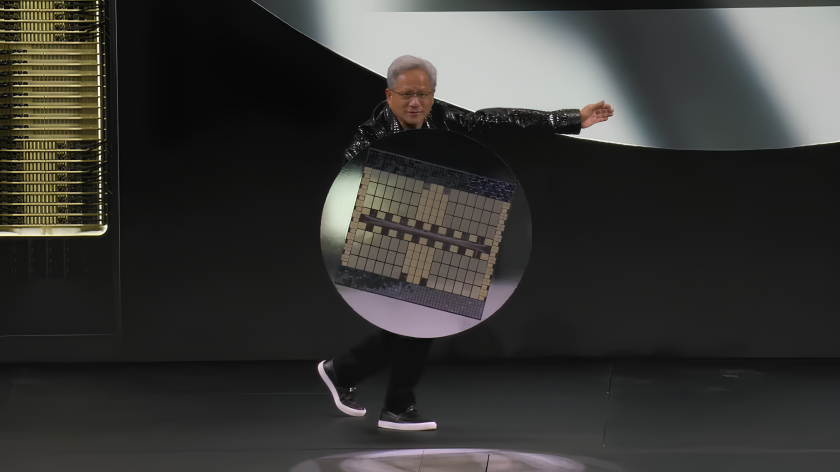

Among the chief showcases was a closer look at the GB200 NVL72, a data centre super chip made up of 72 Blackwell GPUs, held aloft by Huang as if he were Marvel’s Captain America. Huang also presented AI-powered tools and Nim microservices for content creation, and Blueprints for helping businesses build agentic AI applications.


Test and build agentic AI solutions

During his CES keynote, Huang unveiled new Blueprint tools businesses can use to build AI agent systems to automate applications.

Designed to act like “knowledge robots,” the AI agents created using the tools can analyse large quantities of data, and summarise and distil insights from sources like video and images in real time.

Nvidia worked with AI software development organisations like LangChain, LlamaIndex, and CrewAI to build blueprints which integrate Nvidia’s AI Enterprise software solutions into their platforms to help users build agentic AI applications.

The Blueprints include CrewAI’s code documentation tool for software development, which ensures code repositories remain comprehensive and easy to navigate, and LangChain’s structured report generation offering, which helps businesses direct agents to search the web for specific information and then compile the results into a customisable report.

Developers can also build and run the new agentic AI Blueprints as Launchables, providing on-demand access to their preferred developer environments, and enabling quick workflow setup.

One such Blueprint is Llama Nemotron, which developers can use to build AI agents for business-oriented tasks like customer support, fraud detection, and supply chain optimisation.

During his keynote, Huang suggested the Blueprints could pave the way for the next generation of AI: physical interactions, or robots, saying: “All of the enabling technologies that I’ve been talking about are going to make it possible for us in the next several years to see very rapid breakthroughs, surprising breakthroughs, in general robotics.”

“We’re creating a whole bunch of blueprints that our ecosystem could take advantage of,” Huang said. “All of this is completely open source, so you could take it and modify the blueprints.”

Media2 stack brings AI power to broadcasters

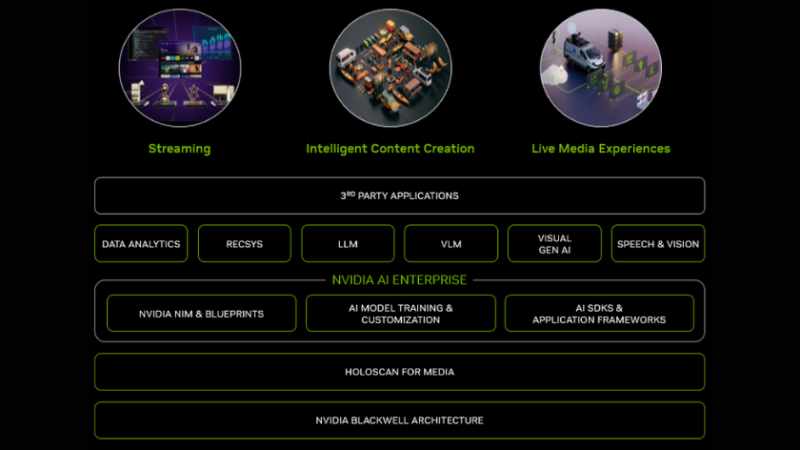

Also unveiled during the CES keynote was Media2, Nvidia’s latest AI-powered initiative, designed to improve content creation, streaming and live media experiences.

Built atop Nvidia’s Nim microservices and AI Blueprints, it gives users access to a swathe of AI-powered tools for those tasks.

The stack features tools like Holoscan for Media, a software platform that lets companies in broadcast, streaming and live sports run live video pipelines on the same infrastructure as AI.

Among the companies working with Nvidia on related innovations is the Comcast-owned broadcaster Sky, which is testing Nvidia’s Nim microservices for uses like applying voice commands to create summaries during live sports events or TV shows.

Verizon is also working with Nvidia in this area, integrating its secure private 5G network with Nvidia’s AI platforms to power solutions at the edge.

Nvidia suggests that its new Media2 offering could help broadcasters and rightsholders enhance viewer experiences by deploying 5G connectivity along with generative AI, agentic AI, and extended reality to offer personalised content to users.

GPU shield signals shift from generative to ‘physical AI’

While Nvidia didn’t unveil any new data centre hardware (save that for GTC or Computex), Huang used a ‘show and tell’ segment to showcase the company’s NVLink, which interconnects chips to improve performance.

Huang held aloft a GPU shield made up of 72 Blackwell GPUs. The circular hardware unit is capable of delivering 1.4 exaFLOPS of compute: the power to perform more than a billion billion calculations every second, equivalent to the combined processing power of hundreds of thousands of modern smartphones.

Huang used the segment to put into perspective how far innovation has come, describing AI alone as having been “advancing at an incredible pace”.

He suggested that improvements in GPU power would enable what he considers the next generation of innovation: robotics.

“It started with perception AI: understanding images, words, and sounds. Then generative AI: creating text, images and sound,” Huang said. “Now, we’re entering the era of physical AI, AI that can perceive, reason, plan and act.”

“The ChatGPT moment for general robotics is just around the corner,” Huang said, as Nvidia also unveiled new solutions aimed at that next generation of innovation, including Cosmos, a world foundation model platform designed to help robotics developers compile expertise and resources to train units.
